Hate-Speech and Offensive Language Detection in Roman Urdu

The first extensive work on data curation and evaluation for hate speech detection in Roman Urdu.

Summary:

The task of automatic hate-speech and offensive language detection in social media content is of utmost importance because of its implications for an unprejudiced society with respect to race, gender, or religion. Existing research in this area, however, focuses mainly on English, which limits its applicability to particular demographics. Despite its prevalence, Roman Urdu (RU) lacks language resources, annotated datasets, and language models for this task. In this study, we: (1) present a lexicon of hateful words in RU, (2) develop an annotated dataset called RUHSOLD, consisting of 10,012 tweets in RU with both coarse-grained and fine-grained labels of hate-speech and offensive language, (3) explore the feasibility of transfer learning from five existing embedding models to RU, (4) propose a novel deep learning architecture called CNN-gram for hate-speech and offensive language detection and compare its performance with seven current baseline approaches on the RUHSOLD dataset, and (5) train domain-specific embeddings on more than 4.7 million tweets and make them publicly available. We conclude that transfer learning is more beneficial than training embeddings from scratch and that the proposed model is more robust than the baselines. (Rizwan et al., 2020)

Overview

This study makes five contributions:

- First, we provide a lexicon of 621 hateful words in RU.

- Second, we develop a gold-standard dataset, called Roman Urdu Hate-Speech and Offensive Language Detection (RUHSOLD), from tweets in RU with binary coarse-grained as well as multi-class fine-grained labels.

- Third, we explore the transfer learning capabilities of five existing multilingual embedding models to RU language through extensive experiments.

- Fourth, we propose a novel deep learning model called Convolutional Neural Network n-gram (CNN-gram) and compare its performance with seven baseline models on the RUHSOLD dataset. We show that CNN-gram is more robust than the baselines on both the coarse-grained and the fine-grained classification tasks.

- Fifth, to contrast with transfer learning from existing embedding models, we train domain-specific embeddings called “RomUrEm” on more than 4.7 million tweets and make them publicly available.

Dataset

First, we construct our own lexicon of hateful words by searching for such keywords online and interviewing people. This lexicon consists of abusive and derogatory terms, along with slurs and terms related to religious hate and sexist language. Using this lexicon together with a separate collection of common RU words, we search for and collect 20,000 tweets. We then perform a preliminary manual analysis to find new slang and abuses and to identify frequently occurring common terms. Common RU words are included so that the search also retrieves random inoffensive tweets, as well as offensive tweets that contain no explicitly offensive words.

Using this updated lexicon, we search for and collect 50,000 new tweets. From this updated tweet base, around 10,000 tweets are randomly sampled for annotation. To avoid user distribution bias, we cap the number of tweets per user at 120.
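The collection steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' pipeline. Tweets are assumed to be in-memory `(user_id, text)` pairs rather than the results of a Twitter search, and lexicon terms are assumed to be single lowercase tokens. The helper names `matches_lexicon` and `sample_for_annotation` are hypothetical. The text does not say whether the 120-tweets-per-user cap is applied before or after random sampling, so this sketch applies it first.

```python
import random
from collections import defaultdict


def matches_lexicon(text, lexicon):
    """True if any lexicon term occurs as a whole (lowercased) token in the tweet.

    Assumption: lexicon entries are single lowercase tokens.
    """
    tokens = set(text.lower().split())
    return not tokens.isdisjoint(lexicon)


def sample_for_annotation(tweets, lexicon, n_samples, max_per_user=120, seed=0):
    """Keep lexicon-matching tweets, cap each user at max_per_user, then sample.

    tweets: list of hypothetical (user_id, text) pairs.
    Assumption: the per-user cap is applied before random sampling.
    """
    rng = random.Random(seed)
    candidates = [t for t in tweets if matches_lexicon(t[1], lexicon)]
    rng.shuffle(candidates)  # so the cap keeps a random subset of each user's tweets
    per_user = defaultdict(int)
    capped = []
    for user, text in candidates:
        if per_user[user] < max_per_user:
            per_user[user] += 1
            capped.append((user, text))
    return rng.sample(capped, min(n_samples, len(capped)))
```

For example, `sample_for_annotation(tweets, lexicon, 10_000)` returns up to 10,000 lexicon-matching tweets, and no user contributes more than 120 of them. Shuffling before capping keeps a random subset of each prolific user's tweets, not just the first 120 encountered.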

The dataset is annotated for two sub-tasks. The first sub-task uses binary labels: "Hate-Offensive" content and "Normal/Neutral" content (i.e., inoffensive language). These labels are self-explanatory. We refer to this sub-task as “coarse-grained classification”. The second sub-task divides Hate-Offensive content into four labels at a granular level. These labels are the most relevant for the demographic of users who converse in RU and are defined in related literature. We refer to this sub-task as “fine-grained classification”. The two sub-tasks let researchers evaluate hate-speech detection approaches in both an easier (coarse-grained) and a more challenging (fine-grained) scenario. All labels and their definitions are summarized as follows:

- Abusive/Offensive: Profanity, strongly impolite, rude or vulgar language expressed with fighting or hurtful words in order to insult a targeted individual or group.

- Sexism: Language used to express hatred towards a targeted individual or group based on gender or sexual orientation.

- Religious Hate: Language used to express hatred towards a targeted individual or group based on their religious beliefs or lack of religious beliefs, as well as the use of religion to incite violence or propagate hatred against a targeted individual or group.

- Profane: The use of vulgar, foul or obscene language without an intended target.

- Normal: Text that does not fall into any of the above categories.
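The four hateful labels together make up the Hate-Offensive class, so a fine-grained label determines the coarse-grained one. The Python sketch below encodes that relationship. The constant and function names are hypothetical, and the sketch assumes labels are stored as the strings used in the definitions above.

```python
# The five fine-grained RUHSOLD labels, spelled as in the definitions above.
FINE_LABELS = ("Abusive/Offensive", "Sexism", "Religious Hate", "Profane", "Normal")

# Hypothetical integer ids for the fine-grained task, e.g. for a softmax output layer.
FINE_TO_ID = {label: i for i, label in enumerate(FINE_LABELS)}


def to_coarse(fine_label: str) -> str:
    """Collapse a fine-grained label into the binary coarse-grained label."""
    if fine_label not in FINE_TO_ID:
        raise ValueError(f"unknown label: {fine_label!r}")
    # Every category except Normal is a kind of Hate-Offensive content.
    return "Normal" if fine_label == "Normal" else "Hate-Offensive"
```

For example, `to_coarse("Profane")` returns `"Hate-Offensive"`. Profane language is untargeted, but it still counts as Hate-Offensive at the coarse level.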

Samples with translations are provided in Table 1 and the dataset statistics are provided in Table 2.

| Tweet | Translation | Target Label |
|---|---|---|
| randi ke bache tu apne hashar ki fikar kar | you son of a prostitute, you should worry for what will happen to you. | Abusive/Offensive |
| Hindu bhenchod hi ki gaand ma hi keerra hota hay Tum hindu ho hi harami tumhara kabhi 1 baap nhi hota | There are always insects in asses of Hindu sisterfu**kers. These hindus have multiple fathers instead of 1. | Religious Hate |
| No wonder you can’t make it to First Lady. At least you managed to grab the title of FIRST RANDDI | No wonder you can’t make it to First Lady. At least you managed to grab the title of FIRST PROSTITUTE. | Sexism |
| bahria central park karachi forms sold out in two days. Abhi tax maango bhenchodo ka rona shru hojayega | bahria central park karachi forms sold out in two days. Now ask them for tax and these motherf**kers start crying. | Profane |
| pakistan me ptv news or ptv parliment ne hi mulk k liye acha kam kia | in pakistan, only ptv news and ptv parliament have done good work for the country. | Normal |

| Label | Tweet Count |
|---|---|
| Abusive/Offensive | 2,402 |
| Sexism | 839 |
| Religious Hate | 782 |
| Profane | 640 |
| Normal | 5,349 |
| Total | 10,012 |
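The counts in Table 2 show how differently the two sub-tasks are balanced. The short Python calculation below uses only those counts. It sums the four hateful categories into the coarse-grained Hate-Offensive class.

```python
# Per-label tweet counts from Table 2.
COUNTS = {
    "Abusive/Offensive": 2402,
    "Sexism": 839,
    "Religious Hate": 782,
    "Profane": 640,
    "Normal": 5349,
}

total = sum(COUNTS.values())
hate_offensive = total - COUNTS["Normal"]  # coarse-grained Hate-Offensive class

for label, n in COUNTS.items():
    print(f"{label:<18} {n:>5}  {n / total:6.1%}")
print(f"{'Hate-Offensive':<18} {hate_offensive:>5}  {hate_offensive / total:6.1%}")
print(f"{'Total':<18} {total:>5}")
```

The total matches the 10,012 tweets reported. The coarse-grained task is close to balanced, with 53.4% Normal against 46.6% Hate-Offensive. The fine-grained task is much more skewed: Profane, the smallest class, makes up only 6.4% of the data.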

Experimental Setup

The experiments were conducted on the RUHSOLD dataset in two settings: coarse-grained binary classification between Normal and Hate-Offensive content, and fine-grained five-class classification (Normal, Abusive/Offensive, Profane, Sexism, and Religious Hate). The data was split into 7,209 training, 801 validation, and 2,003 test tweets. The classes are imbalanced, with Normal as the majority class.
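The reported sizes correspond to roughly a 20% test split, with about a tenth of the remaining tweets held out for validation. The text does not say whether the split was stratified by label. Given the class imbalance, the Python sketch below assumes a stratified split with those fractions. The function name and default seed are hypothetical. Rounding happens per class, so the resulting sizes can differ by a few tweets from the reported ones.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_frac=0.2, val_frac=0.1, seed=0):
    """Split example indices into train/validation/test, class by class.

    Assumed scheme: test_frac of each class goes to test, then val_frac of the
    remaining examples of that class goes to validation; the rest is training.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, val, test = [], [], []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_frac)
        n_val = round((len(idxs) - n_test) * val_frac)
        test.extend(idxs[:n_test])
        val.extend(idxs[n_test:n_test + n_val])
        train.extend(idxs[n_test + n_val:])
    return train, val, test
```

Stratifying keeps the share of small classes such as Profane roughly equal across the three splits. As a result, the test set contains enough examples of each fine-grained label.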

We tested six embeddings: LASER, ELMo, multilingual BERT, XLM-RoBERTa, FastText, and RomUrEm. RomUrEm consists of the domain-specific Roman Urdu embeddings trained on approximately 4.7 million tweets. Seven baseline models were implemented: LSTM with gradient boosted decision trees; Bi-LSTM with attention; FastText with CNN; domain embeddings with CNN; an ensemble of SVM, random forest, and AdaBoost classifiers; BERT with the LAMB optimizer; and BERT with LASER features combined with LightGBM.

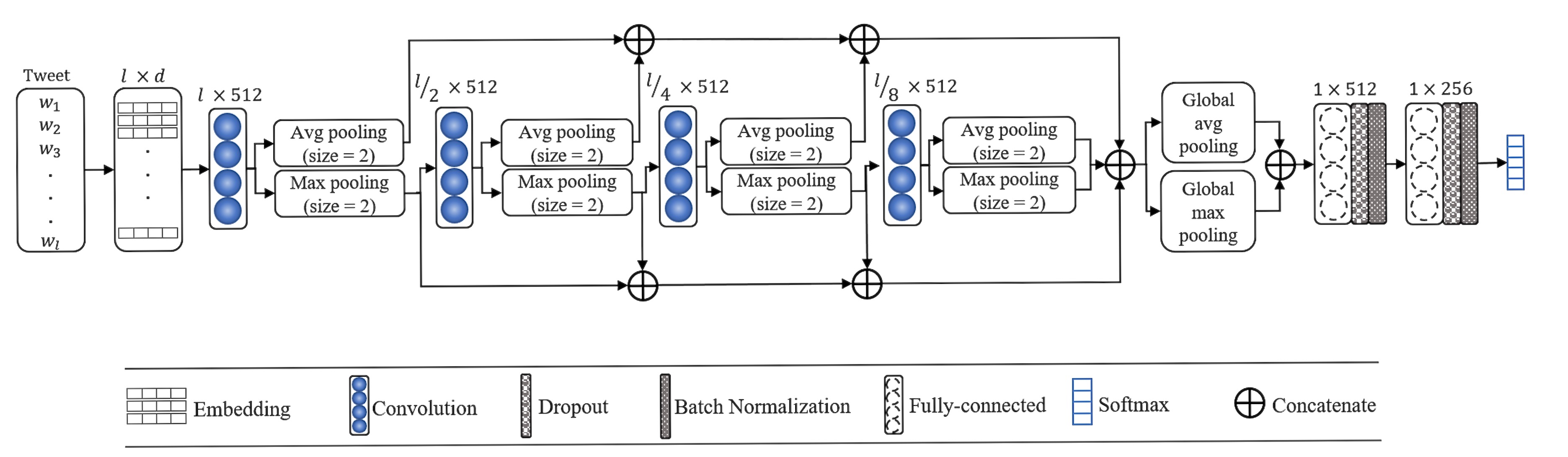

To improve upon the baseline approaches, we propose the CNN-gram model (Figure 1). It stacks convolutional blocks that learn unigram, bigram, trigram, and four-gram patterns, followed by pooling layers and dense layers for classification. CNN-gram was tested with BERT, XLM-RoBERTa, FastText, and RomUrEm embeddings.
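The n-gram idea behind CNN-gram can be illustrated with a NumPy forward pass. This is a minimal sketch under loud assumptions, not the authors' model. It reads "stacks convolutional blocks" as one convolution branch per n-gram width (1 to 4 tokens), followed by ReLU, global max pooling, one hidden dense layer, and a softmax output. The weights are random and untrained. Filter counts, layer sizes, and the function names (`conv1d_valid`, `init_params`, `cnn_gram_forward`) are hypothetical. The input matrix stands in for token embeddings from BERT, XLM-RoBERTa, FastText, or RomUrEm.

```python
import numpy as np

NGRAM_WIDTHS = (1, 2, 3, 4)  # unigram, bigram, trigram, four-gram branches (assumed parallel)


def conv1d_valid(x, w, b):
    """'Valid' 1-D convolution over the token axis.

    x: (seq_len, emb_dim) token embeddings, w: (k, emb_dim, n_filters), b: (n_filters,).
    Returns feature maps of shape (seq_len - k + 1, n_filters).
    """
    k = w.shape[0]
    windows = np.stack([x[i:i + k] for i in range(x.shape[0] - k + 1)])
    return np.einsum("lkd,kdf->lf", windows, w) + b


def init_params(emb_dim, n_filters, hidden, n_classes, seed=0):
    """Random (untrained) weights for every n-gram branch and the dense layers."""
    rng = np.random.default_rng(seed)
    params = {
        k: (rng.normal(0.0, 0.1, (k, emb_dim, n_filters)), np.zeros(n_filters))
        for k in NGRAM_WIDTHS
    }
    n_pooled = len(NGRAM_WIDTHS) * n_filters
    params["hidden"] = (rng.normal(0.0, 0.1, (n_pooled, hidden)), np.zeros(hidden))
    params["out"] = (rng.normal(0.0, 0.1, (hidden, n_classes)), np.zeros(n_classes))
    return params


def cnn_gram_forward(x, params):
    """Class probabilities for one tweet; x needs at least 4 (possibly padded) tokens."""
    pooled = []
    for k in NGRAM_WIDTHS:
        w, b = params[k]
        fmap = np.maximum(conv1d_valid(x, w, b), 0.0)  # ReLU over n-gram features
        pooled.append(fmap.max(axis=0))                # global max pooling over positions
    h = np.concatenate(pooled)
    w_h, b_h = params["hidden"]
    h = np.maximum(h @ w_h + b_h, 0.0)                 # hidden dense layer
    w_o, b_o = params["out"]
    logits = h @ w_o + b_o
    e = np.exp(logits - logits.max())                  # numerically stable softmax
    return e / e.sum()
```

For the fine-grained task, `cnn_gram_forward(x, init_params(768, 64, 128, 5))` returns five probabilities for an `x` of shape `(seq_len, 768)`. Here 768 is the hidden size of BERT-base, and the other sizes are arbitrary. Training the weights (for example with a cross-entropy loss) is omitted.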

Coarse-grained Classification

| Embedding | Accuracy (no fine-tuning) | Precision (no fine-tuning) | Recall (no fine-tuning) | F1-score (no fine-tuning) | Accuracy (fine-tuned) | Precision (fine-tuned) | Recall (fine-tuned) | F1-score (fine-tuned) |
|---|---|---|---|---|---|---|---|---|
| LASER | 0.74 | 0.74 | 0.74 | 0.74 | 0.76 | 0.76 | 0.76 | 0.76 |
| ELMo | 0.80 | 0.80 | 0.80 | 0.80 | 0.79 | 0.79 | 0.79 | 0.79 |
| BERT | 0.68 | 0.70 | 0.68 | 0.67 | 0.89 | 0.90 | 0.89 | 0.89 |
| XLM-RoBERTa | 0.53 | 0.27 | 0.50 | 0.35 | 0.85 | 0.85 | 0.85 | 0.85 |
| FastText | 0.74 | 0.75 | 0.73 | 0.73 | 0.88 | 0.88 | 0.88 | 0.88 |
| RomUrEm | 0.85 | 0.84 | 0.84 | 0.84 | 0.88 | 0.88 | 0.88 | 0.88 |

| Model | Accuracy | Precision | Recall | F1-score |
|---|---|---|---|---|
| LSTM+GBDT | 0.54 | 0.58 | 0.51 | 0.38 |
| BERT+LASER+GBDT | 0.89 | 0.89 | 0.89 | 0.89 |
| FastText+CNN | 0.87 | 0.87 | 0.87 | 0.87 |
| SVM+RF+AB | 0.90 | 0.90 | 0.90 | 0.90 |
| BERT+LAMB | 0.90 | 0.90 | 0.89 | 0.89 |
| Domain Embeddings+CNN | 0.88 | 0.89 | 0.88 | 0.88 |
| BiLSTM with Attention | 0.86 | 0.86 | 0.85 | 0.85 |
| BERT+CNN-gram | 0.90 | 0.90 | 0.90 | 0.90 |
| XLM-RoBERTa+CNN-gram | 0.88 | 0.88 | 0.88 | 0.88 |
| FastText+CNN-gram | 0.81 | 0.81 | 0.80 | 0.80 |
| RomUrEm+CNN-gram | 0.89 | 0.89 | 0.89 | 0.89 |
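The tables report accuracy, precision, recall, and F1 but do not state how the per-class scores are averaged. The Python sketch below computes accuracy plus macro-averaged (unweighted per-class mean) precision, recall, and F1. The function name is hypothetical. Macro averaging is an assumption, but it is consistent with the XLM-RoBERTa row without fine-tuning. A classifier that labels every tweet Normal, on a test set where about 53% of tweets are Normal, produces that pattern of scores up to rounding.

```python
def macro_scores(y_true, y_pred):
    """Return (accuracy, macro precision, macro recall, macro F1).

    A class that is never predicted gets precision 0 (and hence F1 0), matching
    scikit-learn's behaviour with zero_division=0.
    """
    classes = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        n_pred = sum(1 for p in y_pred if p == c)
        n_true = sum(1 for t in y_true if t == c)
        prec = tp / n_pred if n_pred else 0.0
        rec = tp / n_true if n_true else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    acc = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    k = len(classes)
    return acc, sum(precisions) / k, sum(recalls) / k, sum(f1s) / k


# Majority-class predictor on 100 tweets, 53 of them Normal.
y_true = ["Normal"] * 53 + ["Hate-Offensive"] * 47
y_pred = ["Normal"] * 100
print(macro_scores(y_true, y_pred))
```

This gives accuracy 0.53, macro precision 0.265, macro recall 0.50, and macro F1 of about 0.346. These values are in line with the 0.53 / 0.27 / 0.50 / 0.35 reported for XLM-RoBERTa without fine-tuning. The match suggests, but does not prove, that the frozen XLM-RoBERTa classifier collapsed to the majority class.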

Fine-grained Classification

| Embedding | Accuracy (no fine-tuning) | Precision (no fine-tuning) | Recall (no fine-tuning) | F1-score (no fine-tuning) | Accuracy (fine-tuned) | Precision (fine-tuned) | Recall (fine-tuned) | F1-score (fine-tuned) |
|---|---|---|---|---|---|---|---|---|
| LASER | 0.66 | 0.62 | 0.42 | 0.46 | 0.67 | 0.59 | 0.52 | 0.54 |
| ELMo | 0.70 | 0.64 | 0.52 | 0.56 | 0.60 | 0.66 | 0.50 | 0.55 |
| BERT | 0.61 | 0.60 | 0.36 | 0.37 | 0.77 | 0.72 | 0.65 | 0.67 |
| XLM-RoBERTa | 0.53 | 0.11 | 0.20 | 0.14 | 0.79 | 0.70 | 0.75 | 0.72 |
| FastText | 0.62 | 0.55 | 0.33 | 0.35 | 0.77 | 0.69 | 0.63 | 0.66 |
| RomUrEm | 0.70 | 0.69 | 0.51 | 0.56 | 0.79 | 0.76 | 0.63 | 0.67 |

| Model | Accuracy | Precision | Recall | F1-score |
|---|---|---|---|---|
| LSTM+GBDT | 0.44 | 0.28 | 0.29 | 0.27 |
| BERT+LASER+GBDT | 0.73 | 0.67 | 0.63 | 0.64 |
| FastText+CNN | 0.71 | 0.62 | 0.57 | 0.58 |
| SVM+RF+AB | 0.76 | 0.68 | 0.63 | 0.65 |
| BERT+LAMB | 0.76 | 0.67 | 0.63 | 0.64 |
| Domain Embeddings+CNN | 0.74 | 0.64 | 0.60 | 0.61 |
| BiLSTM with Attention | 0.71 | 0.62 | 0.59 | 0.60 |
| BERT+CNN-gram | 0.78 | 0.69 | 0.66 | 0.67 |
| XLM-RoBERTa+CNN-gram | 0.78 | 0.70 | 0.67 | 0.68 |
| FastText+CNN-gram | 0.73 | 0.64 | 0.61 | 0.62 |
| RomUrEm+CNN-gram | 0.79 | 0.71 | 0.67 | 0.69 |
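A simple check helps interpret the fine-grained XLM-RoBERTa row without fine-tuning. Assume macro averaging over K classes, a classifier that always predicts the majority class, and a test set in which that class makes up a fraction p of the tweets. The majority class then has precision p and recall 1. Every other class has recall 0 and, by the usual zero-division convention, precision 0. These assumptions give:

```latex
\begin{aligned}
\mathrm{Accuracy} &= p \\
P_{\mathrm{macro}} &= \frac{1}{K}\bigl(p + (K-1)\cdot 0\bigr) = \frac{p}{K} \\
R_{\mathrm{macro}} &= \frac{1}{K}\bigl(1 + (K-1)\cdot 0\bigr) = \frac{1}{K} \\
F_{1,\mathrm{macro}} &= \frac{1}{K}\cdot\frac{2 \cdot p \cdot 1}{p + 1} = \frac{2p}{K(1 + p)}
\end{aligned}
```

With K = 5 and p = 0.53, this gives 0.53, 0.106, 0.20, and about 0.139. These values match the 0.53 / 0.11 / 0.20 / 0.14 reported for XLM-RoBERTa without fine-tuning. With K = 2, the formulas reproduce the coarse-grained row (0.53 / 0.27 / 0.50 / 0.35) up to rounding. This is consistent with, though not proof of, frozen XLM-RoBERTa predicting Normal for nearly every tweet.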

Conclusion

In this work, we presented a Roman Urdu dataset for hate-speech detection in social media content, annotated with five fine-grained labels. We also make publicly available domain-specific embeddings trained on a corpus of more than 4.7 million tweets. Furthermore, we carried out extensive experiments covering multiple embeddings, their transfer-learning capability, and comparisons with existing baseline models. Semantically challenging fine-grained cases need further investigation in future work, in particular the distinction between Abusive/Offensive (targeted) and Profane (untargeted) language.

References

- Rizwan et al. (2020). Hate-Speech and Offensive Language Detection in Roman Urdu. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP 2020), Online, November 16-20, 2020.